SpotFinder

About

SpotFinder is an Android application that guides users to the closest empty parking spot in a parking lot or structure in order to reduce time spent looking for a place to park.

Technologies Used

- Java - to code the Android app

- Firebase - to store spot information in a real-time non-relational format

- Raspberry Pi - to read sensor data and update the database

- Node.js - to push and pull data from the cloud (used in an earlier version of the app, before I decided to use Firebase)

App workflow

- The app reads the phone's location as soon as the user opens it and automatically selects the parking structure in the database closest to that location. In the rare case that several structures are close together and the app chooses the wrong one, the user can select the structure they are actually in from the main screen.

- The user is presented with a map of the structure along with a selection of the exits for them to choose which one to park closest to. When the user selects an exit, the app shows them the three closest empty spots to that exit, along with the walking distance from each spot to the selected exit.

- The user can select any of the three spots to park in, clicking the corresponding button to let the app know which one they chose.

- The app's database then registers that spot as reserved by the user and removes it from the list of vacant spots. If the user does not park in the spot within a few minutes, the reservation is lifted and the spot is listed as vacant once again, allowing other users to park there.

- Later, when the user returns to the parking structure and is ready to leave, they can select the option on the app's main screen that shows them which spot they parked in, allowing them to return to their car as quickly as possible.

Technical Specifics

Detecting empty spots

- IR breakbeam sensors were installed across every spot on a scale model I built to demonstrate the app in real time. When a spot was filled, the beam was tripped and the spot was registered as full.

- To prevent spots from being misclassified when people or animals accidentally break the beam, each beam has a three-second timer: the spot's status changes only if the beam stays broken for the full three seconds.
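The three-second rule above can be sketched as a small debounce helper. This is only an illustration: the class name `SpotSensor`, its fields, and the choice to apply the same hold time when a spot empties again are assumptions, not the code that ran on the Raspberry Pi.

```python
from dataclasses import dataclass
from typing import Optional

DEBOUNCE_SECONDS = 3.0  # from the text: a reading must persist for three seconds


@dataclass
class SpotSensor:
    """Debounced occupancy state for one spot (hypothetical helper class)."""

    occupied: bool = False                 # last confirmed status
    pending_since: Optional[float] = None  # when the raw reading first disagreed

    def update(self, beam_broken: bool, now: float) -> bool:
        """Feed one raw reading taken at time `now` (seconds); return the confirmed status."""
        if beam_broken == self.occupied:
            # The reading agrees with the confirmed status, so cancel any pending change.
            self.pending_since = None
        elif self.pending_since is None:
            # First disagreeing reading: start the timer.
            self.pending_since = now
        elif now - self.pending_since >= DEBOUNCE_SECONDS:
            # The disagreement has held long enough: accept the new status.
            self.occupied = beam_broken
            self.pending_since = None
        return self.occupied
```

A brief interruption (someone walking through the beam) resets the timer, so only a reading that holds for the full three seconds reaches the database.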

Data

- The data was stored in a real-time NoSQL Firebase database, with static values such as the exits, spots, and the distances between them, and dynamic values such as the status of each spot.

- Each entry in the database was a parking structure, and each entry within a parking structure was an exit, with distances to each of the spots as the values for that exit.

- Using a non-relational database allowed the retrieval of data as a JSON object, which resembles a map or dictionary, so lookup for a specific exit was O(1) time, and looking through the spots was O(n) time.

- Since the spots were organized in order of increasing distance, the number of spots searched was a linear function of how busy the parking structure was.

- For security purposes, the database was protected and required a Google sign-in to access. Users therefore had to sign in to their Google accounts to use the app, since selecting and reserving spots requires reading from and writing to the database.
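The exit lookup and spot scan described in this list can be sketched in Python. The app itself was written in Java, and the schema here (the `STRUCTURE` and `STATUS` dictionaries, the spot IDs, the status strings) is a hypothetical stand-in for the Firebase data.

```python
# Hypothetical snapshot of one structure: exit -> list of (spot_id, distance),
# pre-sorted by increasing distance as the text describes.
STRUCTURE = {
    "north_exit": [("A1", 5), ("A2", 8), ("B1", 12), ("B2", 15), ("C1", 21)],
    "south_exit": [("C1", 4), ("B2", 9), ("B1", 13), ("A2", 17), ("A1", 20)],
}

# Hypothetical dynamic data: current status of every spot.
STATUS = {"A1": "full", "A2": "vacant", "B1": "reserved", "B2": "vacant", "C1": "vacant"}


def closest_vacant_spots(structure, status, exit_name, k=3):
    """Return up to k (spot_id, distance) pairs for the vacant spots nearest an exit."""
    results = []
    # Dictionary lookup for the exit, then a linear scan over its sorted spots.
    for spot_id, distance in structure[exit_name]:
        if status[spot_id] == "vacant":
            results.append((spot_id, distance))
            if len(results) == k:
                break  # the list is sorted, so the first k vacant spots are the closest
    return results
```

Because the spots are sorted by distance, the scan can stop at the third vacant spot. It therefore reads further into the list only when many of the near spots are taken.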

Joint Motion

About

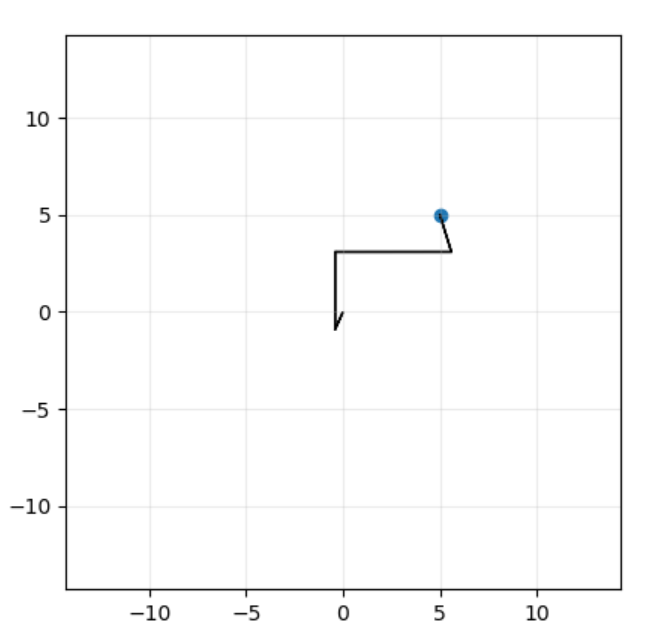

Joint Motion is a different take on pathfinding using machine learning to get a multi-jointed arm to reach a specified target point. The arm, which the user can define by specifying the number of segments and the length of each segment, searches the state space using binary search, aligning itself to the position that minimizes the distance from its tip to the target point. Inspired by the arm on our robot in high school, this simulation is applicable to situations where an arm is tasked with reaching a specific point in space.

Technologies Used

Python - used to code the whole simulation, chosen for ease of use and accessibility to numpy and matplotlib modules

Technical Specifics

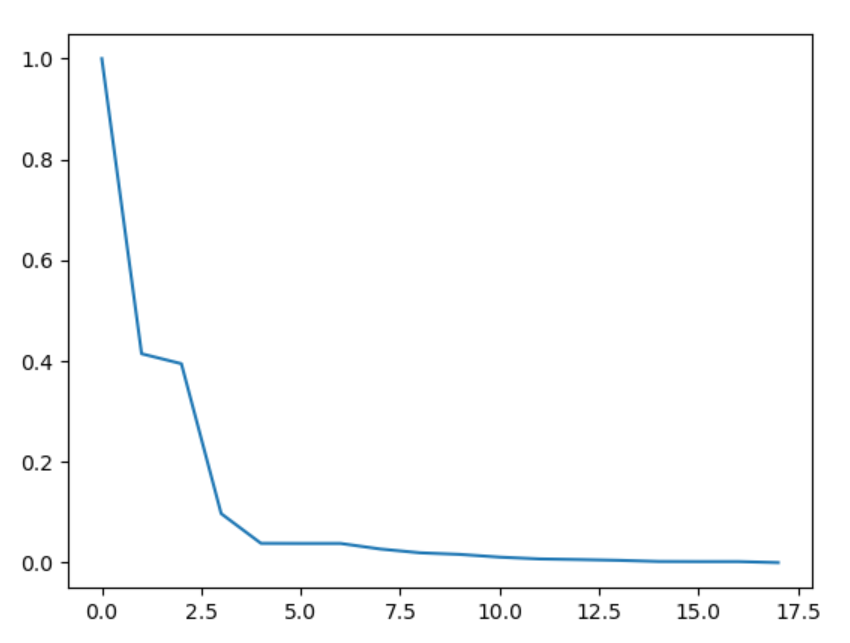

Error calculation - The error is the Euclidean distance between the tip of the arm and the target point. Each arm segment is modeled as a vector to simplify the calculations of the endpoint of each segment, the movement of all segments attached to any given segment, and the tip of the arm in space. The arm originates at the origin to simplify the numbers, and a graph of iterations vs errors is shown once the arm is in place.

Binary search - At each iteration, each segment of the arm rotates by a fixed angle about its fulcrum, carrying all subsequent segments with it; the resulting tip position is recorded and its error calculated. The configuration with the minimum error is stored, the arm moves to that position, and the next iteration starts from there. The angle of rotation starts at 180 degrees and is halved at each iteration. The search stops when the error has been 0 for three iterations or when the maximum number of iterations is reached.
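One way to read the search described above is the following minimal sketch. It is an interpretation, not the project's code: the function names are hypothetical, each joint is tried in both directions and accepted greedily, and a small tolerance `tol` stands in for "error of 0".

```python
import math


def tip_position(lengths, angles):
    """Tip of a planar arm rooted at the origin; angles are relative joint angles (radians)."""
    x = y = heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle  # rotating a joint carries every later segment with it
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y


def reach(lengths, target, max_iters=40, tol=1e-6):
    """Halving-step search; returns the final joint angles and the error history."""

    def error(angles):
        x, y = tip_position(lengths, angles)
        return math.hypot(x - target[0], y - target[1])  # Euclidean distance to target

    angles = [0.0] * len(lengths)
    best = error(angles)
    errors = [best]
    step = math.pi  # start at 180 degrees
    converged = 0
    for _ in range(max_iters):
        for joint in range(len(angles)):
            for delta in (step, -step):
                candidate = list(angles)
                candidate[joint] += delta
                candidate_error = error(candidate)
                if candidate_error < best:  # keep only configurations that improve
                    angles, best = candidate, candidate_error
        errors.append(best)
        step /= 2.0  # halve the rotation angle each iteration
        converged = converged + 1 if best <= tol else 0
        if converged == 3:  # error has stayed at (near) zero for three iterations
            break
    return angles, errors
```

For example, `reach([1.0, 1.0], (1.0, 1.0))` returns the joint angles together with a list of errors that never increases, which is the data behind an iterations-vs-error plot.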

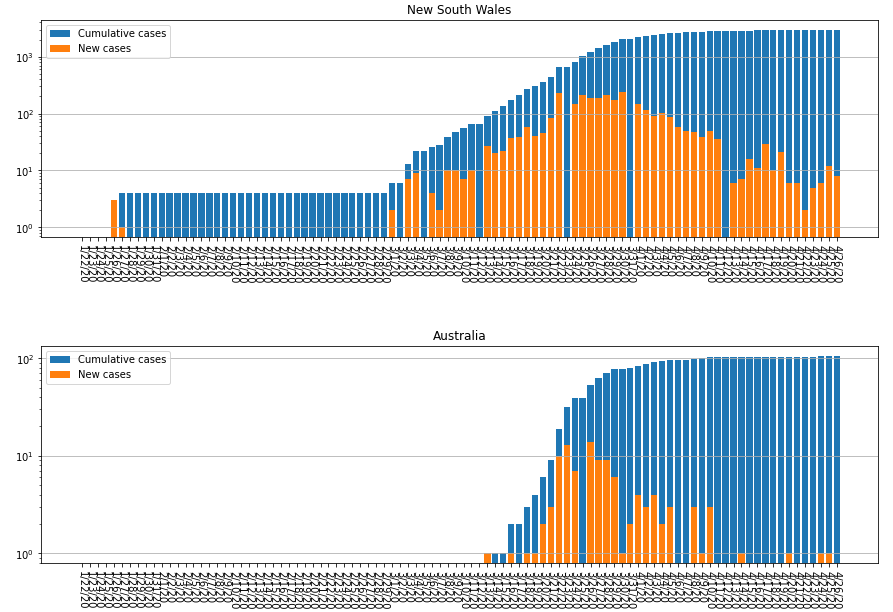

COVID-19 Prediction

About

This project was a submission to the Covid-19 Forecasting Challenge set up by Kaggle in the early days of the lockdown to crowdsource predictions about the rise of cases and deaths in different regions, hoping to inform politicians and healthcare workers about the numbers they might be dealing with.

Technologies Used

- Python - used to code the whole solution

- pandas - to read, format, and parse input CSV data

- scipy - to fit to logistic equations using optimizers

- sklearn - to create and train regression models

- matplotlib - to display results

Technical Specifics

Data preparation

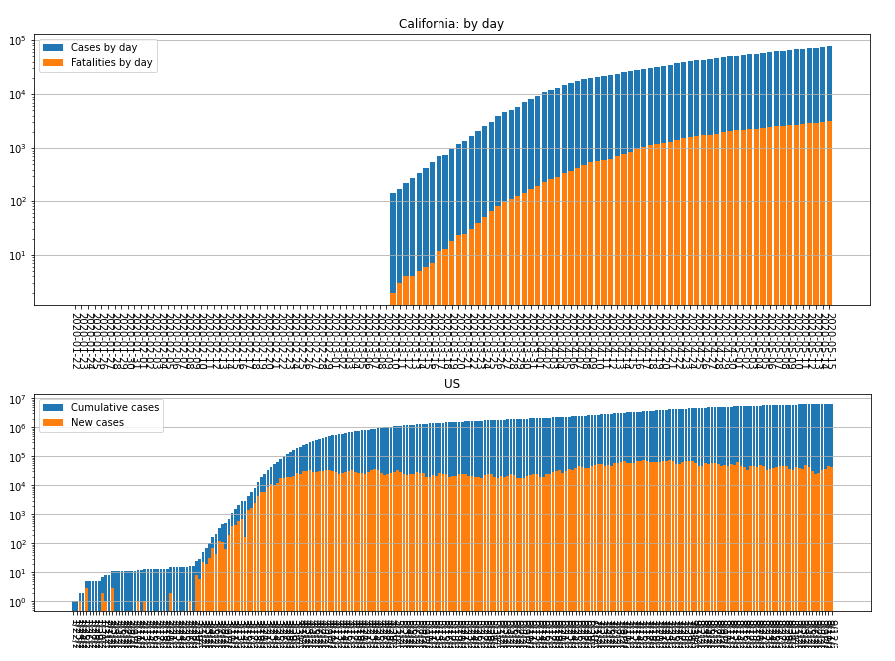

- Kaggle provided the dataset to be used, which consisted of cases and deaths per day for hundreds of countries/regions around the world, lumped under the novel-corona-virus-2019-dataset.

- I prepared the data by extracting the dates and aligning entries across multiple CSV files. Some regions had no data for some dates, either because record-keeping started late or because of a reporting error. Aligning the entries by date avoided major problems from these gaps.

- I then graphed all the data that had been collected so far for the region of interest, and passed that data to the four different prediction models I had set up.

Prediction models

- A logistic regression model using sklearn's linear_model.LogisticRegression class

- A curve fit to a logistic equation using scipy's optimize.curve_fit function

- A least-squares fit to a logistic equation using scipy's optimize.least_squares function

- A multi-layer perceptron regression using sklearn's neural_network.MLPRegressor class

While best-fit scores and prediction accuracies varied between data sets, the MLP regressor tended to have the best fit to existing data and be the most accurate when compared against new case data days later.
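For reference, the logistic equation used by the two scipy fits is commonly written in the three-parameter form below. The exact parametrization used in the submission is not stated here, so the symbols are assumptions: \(K\) is the final cumulative count the curve levels off at, \(r\) is the growth rate, and \(t_0\) is the day of fastest growth.

\[
N(t) = \frac{K}{1 + e^{-r (t - t_0)}}
\]

Fitting then means choosing \(K\), \(r\), and \(t_0\) to minimize the squared difference between \(N(t)\) and the reported cumulative counts. Both `optimize.curve_fit` and `optimize.least_squares` do this numerically.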

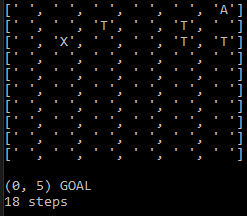

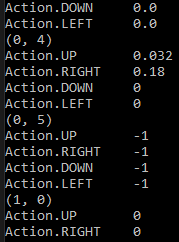

RL Gridworld

About

RL Gridworld is an experiment in reinforcement learning, applied to the traditional gridworld environment, having the agent learn its way to the goal while avoiding traps placed randomly throughout the grid.

Technologies Used

- Python - used to code the whole simulation

Technical Specifics

Agent

- The agent learns with Q-learning, an off-policy algorithm that stores a value for each state-action pair, allowing the agent to take the optimal action at each state without a predefined policy.

- The grid is also "windy" so the agent is sometimes blown off-course from its intended action, forcing the agent to figure out its way to the goal from its new position, which is made easier by the cataloguing of each state-action pair.

Q-Learning

- The agent starts off in a random location on the board, and from there takes a random action, recording that action and the reward it gets after taking the action.

- For most squares on the board, the reward is 0, with exceptions for traps and the goal, which are -1 and +1 respectively, forcing the agent to keep exploring the board to maximize its reward.

- The agent keeps exploring random actions until it arrives at the goal, at which point it has documented one path from its starting position to the goal by recording the state-action pairs and the probabilities with which it should take each action from a given state.

- The agent has a certain number of iterations, n, to learn the board, and if it reaches the goal in fewer than n iterations, it starts over at its original starting point, exploring the board using its newfound knowledge of the rewards.

- To avoid stagnating on what it has already learned, the agent chooses a random action with probability ε, in the hope of finding a better path to the goal than the one it already found.

- After n iterations the agent demonstrates what it has learned by starting from its original starting position and navigating to the goal.
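The learning loop above can be sketched with a tabular Q-learning update. This is a minimal illustration under several assumptions: the grid size, trap positions, learning rate `alpha`, discount `gamma`, wind probability, step cap per episode, and fixed start square are all hypothetical values.

```python
import random

ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def move(state, action, size, traps, goal, wind, rng):
    """Apply an action; with probability `wind` a random action replaces it."""
    if rng.random() < wind:
        action = rng.choice(list(ACTIONS))
    d_row, d_col = ACTIONS[action]
    row = min(max(state[0] + d_row, 0), size - 1)  # stay inside the grid
    col = min(max(state[1] + d_col, 0), size - 1)
    nxt = (row, col)
    if nxt == goal:
        return nxt, 1.0, True  # goal: reward +1 and the episode ends
    return nxt, (-1.0 if nxt in traps else 0.0), False  # trap: -1, otherwise 0


def greedy_action(q, state, rng):
    """Best known action for a state, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(size=4, traps=((1, 1), (2, 3)), goal=(3, 3), start=(0, 0),
               episodes=300, max_steps=200, alpha=0.5, gamma=0.9,
               epsilon=0.1, wind=0.1, seed=0):
    rng = random.Random(seed)
    q = {((r, c), a): 0.0 for r in range(size) for c in range(size) for a in ACTIONS}
    for _ in range(episodes):
        state = start
        for _ in range(max_steps):
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                action = rng.choice(list(ACTIONS))
            else:
                action = greedy_action(q, state, rng)
            nxt, reward, done = move(state, action, size, traps, goal, wind, rng)
            # Off-policy target: reward plus discounted value of the best next action.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
            if done:
                break
    return q
```

After training, repeatedly calling `greedy_action` from the start square traces the path the agent has learned.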

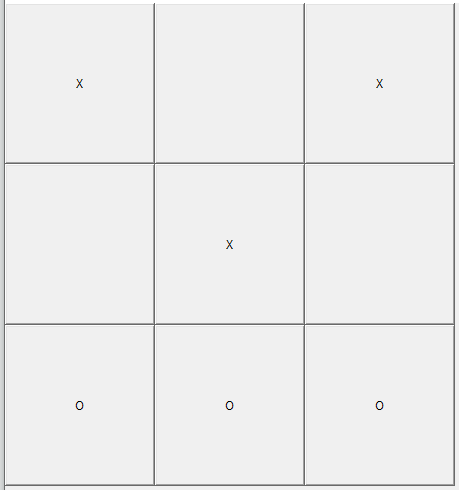

Tic Tac Toe

About

Tic Tac Toe is an implementation of the classic game, with both the traditional player-vs-player version and a player-vs-computer version. The PvP version simply alternates between X and O, ending the game when the board is full or there is a winner. The exciting version is obviously the PvC, which always either wins or draws the match, never losing to a simpleton human player. It achieves this by predicting the human player's next move and blocking or one-upping that move.

Technologies Used

Python - used to code the whole game, chosen for ease of use

Technical Specifics

Predicting the player's move - The computer models the player's next move as a probability distribution over the nine squares, corresponding to what it thinks are the probabilities of the player placing in each square. Each prediction begins with a uniform distribution with a probability score of 1 for every square. The computer then looks for any two squares in a line that are occupied by the player and adds 1 to the probability score of the third square in those lines, indicating that the player is most likely to place in that third square to complete the line. Then, it checks for any two squares in a line separated by a gap and adds 0.5 to the probability score of that gap square, indicating that the player is likely going to place in the gap square, but with not as much likelihood as the previous check. The squares that are already filled are then given a probability score of 0, preventing them from being considered for the next move. The probability distribution is then normalized, and the computer now has a heatmap of where the player is likely to place their next move.

Choosing the next best move - The computer models its next move as a probability distribution just like its prediction of the player's next move, again starting with a uniform distribution.

It first tries to get the center square if it is still empty, since it is a powerful square to control, setting its probability score to 2.

It then looks for any two squares that it controls in a line with an empty third square in their line, and adds 1 to the probability score of that third square, indicating that it wants to fill that third square and complete the line.

Then, it checks for any single square it controls that has two empty squares in a line in any direction, and adds 0.5 to the probability score of the neighboring squares in those directions, indicating that it would like to fill that neighboring square and set up a line it can complete.

The computer then normalizes its probability distribution, and is now left with two distributions, one for the human player's next move and one for its own next move, with the highest probability square in each one representing each player's optimal next move.

By adding those two distributions together and taking the argmax over the squares, the computer determines where it should place next. The result is the probabilistic combination of the square the human player is most likely to move to, which the computer should block, and the square that is best for the computer regardless of the human player's move.
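The two scoring passes and their combination can be sketched as follows. The board representation (a list of nine entries, `None` for empty) and the function names are hypothetical, and two details are assumptions: both empty squares of a half-started line receive the 0.5 bonus, and occupied squares are zeroed in the computer's distribution as well.

```python
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
         (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
         (0, 4, 8), (2, 4, 6)]              # diagonals


def normalize(scores):
    total = sum(scores)
    return [s / total for s in scores] if total else scores


def mask_filled(board, scores):
    """Occupied squares cannot be played, so their score drops to 0."""
    return [0.0 if board[i] is not None else s for i, s in enumerate(scores)]


def predict_player(board, player):
    scores = [1.0] * 9  # uniform starting scores
    for line in LINES:
        marks = [board[i] for i in line]
        if marks.count(player) == 2 and marks.count(None) == 1:
            third = line[marks.index(None)]
            scores[third] += 1.0      # player can complete this line
            if marks[1] is None:
                scores[third] += 0.5  # the empty square is a gap between two marks
    return normalize(mask_filled(board, scores))


def plan_computer(board, computer):
    scores = [1.0] * 9
    if board[4] is None:
        scores[4] = 2.0  # prefer the center square while it is free
    for line in LINES:
        marks = [board[i] for i in line]
        empties = [i for i in line if board[i] is None]
        if marks.count(computer) == 2 and len(empties) == 1:
            scores[empties[0]] += 1.0  # completes the computer's own line
        elif marks.count(computer) == 1 and len(empties) == 2:
            for i in empties:
                scores[i] += 0.5       # sets up a line that can be completed later
    return normalize(mask_filled(board, scores))


def choose_move(board, computer="O", player="X"):
    combined = [p + c for p, c in zip(predict_player(board, player),
                                      plan_computer(board, computer))]
    return max(range(9), key=combined.__getitem__)  # argmax over the squares
```

With X on squares 0 and 1 and O in the center, `choose_move` returns square 2, the block. On an empty board it returns the center, square 4.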

Balls

About

Balls is a physics simulation of balls subject to gravity and placed in an environment with obstacles that block their fall. The balls bounce and roll realistically, taking into account their different sizes, masses, and elasticities.

Technologies Used

- Python - used to code the whole simulation

- numpy - to model vectors and run calculations on them

- matplotlib - to animate and display the motion of the balls

Technical Specifics

Movement

- To make the motion as realistic as possible, gravity is modeled as a force, which when divided by the mass of the ball, gives the acceleration on the ball.

- The velocity of the ball is calculated as \(v = v_{prev} + a \Delta t\), with \(\Delta t = 0.01s\). The integration can be made more accurate by reducing the timestep, but this comes at the expense of slower overall movement since the distance traveled between each refresh of the window is smaller.

- The position is then calculated as \(y = y_{prev} + v \Delta t + \frac{1}{2} a (\Delta t)^2\), again with \(\Delta t = 0.01s\), and the ball is redrawn at its new position.
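The two update equations above translate directly into a single time step. In this sketch the function name and the example force are hypothetical; the formulas and the default \(\Delta t\) follow the text, including the use of the freshly updated velocity in the position update.

```python
def integrate_step(y_prev, v_prev, force, mass, dt=0.01):
    """Advance one ball by one time step along the vertical axis."""
    a = force / mass                          # acceleration from the net force
    v = v_prev + a * dt                       # v = v_prev + a * dt
    y = y_prev + v * dt + 0.5 * a * dt ** 2   # y = y_prev + v * dt + (1/2) a dt^2
    return y, v
```

Starting a 2 kg ball at rest at height 10 with a net force of `-9.81 * 2.0` gives a velocity of -0.0981 and a height of about 9.99853 after one step.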

Bouncing and rolling

- Keeping with the theme of realism, impacts with obstacles are modeled using the equation for impulse, \(\Delta p = m \Delta v\).

- The instantaneous force on the ball is then calculated as \(F = \frac{\Delta p}{\Delta t} = \frac{m \Delta v}{\Delta t}\), scaled by the elasticity of the ball, and added vectorially to the net force on the ball, causing it to accelerate perpendicular to the obstacle at the point of impact.

- Rolling is currently modeled as sliding, with the net force set by vectorially adding the obstacle's normal force to the ever-present force of gravity. To allow for both rolling and bouncing, a velocity threshold is set at 2: if the ball's velocity perpendicular to the obstacle is greater than 2, the ball bounces, and if it is less than 2, the ball rolls.

A future modification could be to model rolling more realistically with rotational mechanics, although the simulation is already convincingly realistic.
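The bounce-or-roll decision can be illustrated with a simplified contact rule. This sketch changes the velocity directly instead of adding an impulse force to the net force as the simulation does. The function name and the use of a unit normal vector are assumptions; only the threshold of 2 and the elasticity scaling come from the description.

```python
def resolve_contact(velocity, normal, elasticity, threshold=2.0):
    """Return the velocity after touching an obstacle with the given unit normal."""
    vx, vy = velocity
    nx, ny = normal                # unit vector pointing away from the obstacle
    v_n = vx * nx + vy * ny        # velocity component perpendicular to the obstacle
    if v_n >= 0.0:
        return velocity            # already moving away, so nothing to resolve
    if -v_n > threshold:
        scale = 1.0 + elasticity   # bounce: reverse the normal component, scaled
    else:
        scale = 1.0                # roll (modeled as sliding): cancel the normal component
    return (vx - scale * v_n * nx, vy - scale * v_n * ny)
```

A ball hitting a floor at `(1.0, -5.0)` with elasticity 0.5 leaves at `(1.0, 2.5)`, while one arriving at `(1.0, -1.0)` is below the threshold and continues along the surface at `(1.0, 0.0)`.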

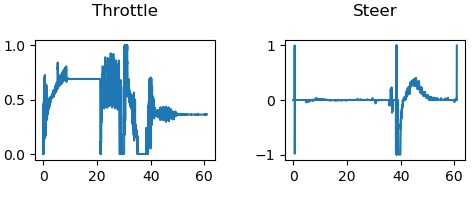

Self Driving Car

About

This was the final project for the Self Driving Cars Specialization on Coursera. The vehicle drives autonomously, maintaining a safe distance from the lead car and avoiding obstacles as it plans a path between two endpoints on a map in the Carla simulation environment.

Technologies Used

- Python - used to code the whole project

- numpy - to perform matrix calculations

- scipy - to pick the optimal path at each iteration

- carla - to interact with the Carla simulation environment

- matplotlib - to visualize the trajectory and control outputs

Technical Specifics

The vehicle implements a multi-part motion planner, with a collision checker for path planning, a state machine for behavior planning, and dynamic motion models for velocity planning.

Path planning

- At every location, the car plans its path up to a lookahead distance. Between itself and that lookahead distance, the motion planner generates 9 laterally offset paths, each a preset distance away from the next. This allows the car to explore multiple paths around obstacles, not getting stuck when a large obstacle blocks its currently planned path.

- The car is modeled as three overlapping circles oriented longitudinally, and is tracked along each proposed laterally offset path segment, checking for obstacles coming within the circles. A path segment is deemed collision-free if the center of the center circle reaches the end of the path with no obstacles entering any circle at any point along the segment.

- The collision-free paths are then sorted according to their deviations from the nominal path, defined as the cumulative distance between each point on the path and its corresponding (\(x, y, \theta, v\)) waypoint. The collision-free path with the smallest accumulated distance from the nominal path is selected as the best path to follow.
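The circle-based collision check and the path selection can be sketched as below. This is a simplified illustration, not the course solution: the function names, the circle offsets and radius, and the representation of paths as lists of `(x, y, yaw)` tuples and obstacles as points are all assumptions.

```python
import math


def circle_centers(x, y, yaw, offsets=(-1.0, 0.0, 1.0)):
    """Centers of the circles approximating the car, spaced along its heading."""
    return [(x + d * math.cos(yaw), y + d * math.sin(yaw)) for d in offsets]


def is_collision_free(path, obstacles, radius=1.5):
    """True if no obstacle point falls inside any circle at any point on the path."""
    for x, y, yaw in path:
        for cx, cy in circle_centers(x, y, yaw):
            for ox, oy in obstacles:
                if math.hypot(ox - cx, oy - cy) < radius:
                    return False
    return True


def select_best_path(paths, obstacles, nominal, radius=1.5):
    """Index of the collision-free path closest to the nominal path, or None."""
    best_index, best_cost = None, math.inf
    for index, path in enumerate(paths):
        if not is_collision_free(path, obstacles, radius):
            continue
        # Accumulated distance between each path point and its matching waypoint.
        cost = sum(math.hypot(px - wx, py - wy)
                   for (px, py, _), (wx, wy, _) in zip(path, nominal))
        if cost < best_cost:
            best_index, best_cost = index, cost
    return best_index
```

With no obstacles the nominal path itself wins with a cost of zero. When an obstacle sits on it, the nearest clear lateral offset is chosen instead.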

Behavior planning

- The state machine behavior planner defines three states for the car: FOLLOW_LANE, DECELERATE_TO_STOP, and STAY_STOPPED.

- FOLLOW_LANE is the nominal state, allowing the path and velocity planners to function uninterrupted, and is used when driving on open road.

- When the behavior planner identifies a stopping point in the planned path segment (stop sign, traffic light, stopped lead car), it transitions to the DECELERATE_TO_STOP state, which sets the target velocity for the velocity planner to 0, forcing it to decelerate to a full stop. If, while decelerating, it decides it no longer needs to stop (for example, a stopped lead car starts moving again), the state machine transitions back to FOLLOW_LANE.

- When the car reaches 0 velocity in the DECELERATE_TO_STOP state, the state machine transitions to STAY_STOPPED, which keeps the target velocity at 0 for a preset amount of time. Once the time expires, the behavior planner transitions back to the FOLLOW_LANE state, resuming the car on its previous path. If there are obstacles in the car's path (intersection hasn't cleared), the behavior planner falls back to the DECELERATE_TO_STOP state, and quickly reaches the STAY_STOPPED state since the car's velocity was low. It then restarts the timer, waiting to go back to FOLLOW_LANE.
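The three states and their transitions can be sketched as a small state machine. The state names come from the description; the class name, the `stop_ahead` flag, the speed threshold, and counting the stop time in planning cycles are assumptions made for illustration.

```python
from enum import Enum, auto


class State(Enum):
    FOLLOW_LANE = auto()
    DECELERATE_TO_STOP = auto()
    STAY_STOPPED = auto()


class BehaviorPlanner:
    """Hypothetical planner; `stop_cycles` is the preset wait, in planning cycles."""

    def __init__(self, stop_cycles=10, stop_speed=0.02):
        self.state = State.FOLLOW_LANE
        self.stop_cycles = stop_cycles
        self.stop_speed = stop_speed  # speeds at or below this count as stopped
        self.stopped_for = 0

    def update(self, stop_ahead, speed):
        """Advance one planning cycle and return the new state."""
        if self.state is State.FOLLOW_LANE:
            if stop_ahead:
                self.state = State.DECELERATE_TO_STOP
        elif self.state is State.DECELERATE_TO_STOP:
            if not stop_ahead:
                self.state = State.FOLLOW_LANE   # the reason to stop went away
            elif speed <= self.stop_speed:
                self.state = State.STAY_STOPPED  # full stop reached
                self.stopped_for = 0
        else:  # STAY_STOPPED
            self.stopped_for += 1
            if self.stopped_for >= self.stop_cycles:
                self.state = State.FOLLOW_LANE   # timer expired, try to resume
        return self.state
```

If the stopping condition is still present once the timer expires, the next cycles move through DECELERATE_TO_STOP back to STAY_STOPPED, which matches the fallback behavior described above.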

Velocity planning

- The velocity planner operates in three modes: tracking a nominal speed, following a lead car, and decelerating to a stop. In all modes, the planner outputs the velocity the car should track at each iteration, considering the dynamics of the car and the road conditions, but does not actually drive the car. The output of the planner represents the expected velocity of the car at each iteration, and tracking this velocity is the responsibility of the PID longitudinal and lateral controllers implemented in the first course of this specialization.

- To track a nominal speed along a stretch of open road, the planner computes a trapezoidal velocity profile between the car's current speed and the speed limit of that road, with the ramp rate given by the vehicle's maximum comfortable acceleration. When the car is traveling at the speed limit, the planner continues to output the speed limit as the expected velocity.

- Decelerating to a stop is similar, with a trapezoidal velocity profile between the car's current speed and 0, with the ramp rate determined by the vehicle's maximum comfortable braking deceleration.

- To follow a lead car, the planner takes in the vehicle's current velocity and the lead car's position to calculate the time gap \(t_g\) between the lead car and itself. It also estimates the lead car's velocity relative to the ego vehicle, by tracking its displacement over the past few iterations, and sets the minimum of that velocity and the speed limit as the target velocity for the time \(t_g\). It generates a trapezoidal velocity profile between the ego vehicle's current speed and that target velocity, again limited by the vehicle's physical limitations on comfortable acceleration and deceleration.
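The ramp-and-hold part of these velocity profiles can be sketched in a few lines. This is a time-stepped simplification, not the course solution: the function names, the separate acceleration and deceleration limits, and the fixed step `dt` are assumptions.

```python
import math


def ramp_profile(v_start, v_target, a_max, d_max, dt, steps):
    """Speeds for the next `steps` cycles: ramp toward v_target, then hold it."""
    profile = []
    v = v_start
    for _ in range(steps):
        if v < v_target:
            v = min(v + a_max * dt, v_target)  # limited by comfortable acceleration
        elif v > v_target:
            v = max(v - d_max * dt, v_target)  # limited by comfortable braking
        profile.append(v)
    return profile


def time_gap(distance_to_lead, ego_speed):
    """Seconds until the ego car reaches the lead car's current position."""
    return distance_to_lead / ego_speed if ego_speed > 0.0 else math.inf


def follow_target_speed(lead_speed, speed_limit):
    """Target speed when following: never faster than the lead car or the limit."""
    return min(lead_speed, speed_limit)
```

For example, `ramp_profile(0.0, 2.0, 1.0, 1.0, 0.5, 6)` climbs by 0.5 per step and then holds at 2.0. Passing a target of 0 produces the decelerate-to-stop profile, and passing `follow_target_speed(...)` as the target produces the lead-following profile.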

Ant Pathfinding

About

Inspired by Ant and Slime Simulations by Sebastian Lague, this simulation models a colony of ants finding an optimal path between randomly placed food sources, using pseudorandom exploration and guided by the pheromone trails laid by previous generations.

Technologies Used

- Python - used to code the whole project

- numpy - to easily calculate distances using vector norms

- matplotlib - to run and display the animation

Technical Specifics